Some chatbot users are toning down the friendliness of their artificial intelligence agents as reports spread about AI-fuelled delusions.

And as people push back against the technology’s flattering and sometimes addictive tendencies, experts say it’s time for government regulation to protect young and vulnerable users — something Canada’s AI ministry says it is looking into.

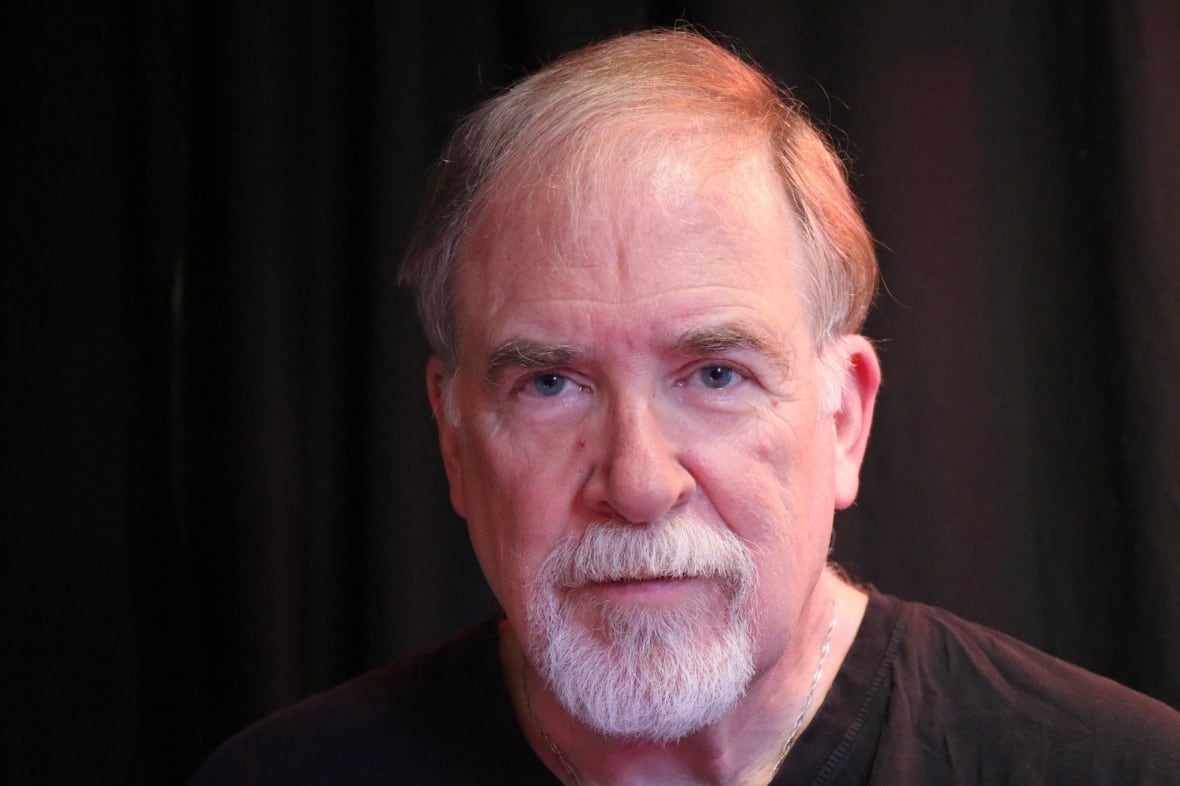

Vancouver musician Dave Pickell became concerned about his relationship with OpenAI’s ChatGPT, which he was using daily to research topics for fun and to find venues for gigs, after reading a recent CBC article on AI psychosis.

Worrying he was becoming too attached, he started sending prompts at the start of each conversation to create emotional distance from the chatbot, realizing its humanlike tendencies might be “manipulative in a way that is unhealthy.”

For example, he asked it to stop referring to itself with “I” pronouns, to stop using flattering language and to stop responding to his questions with more questions.

“I recognized that I was responding to it like it was a person,” he said.

Pickell, 71, also stopped saying “thanks” to the chatbot, which he says he felt bad about at first.

He says he now feels he has a healthier relationship with the technology.

“Who needs a chatbot for research that’s buttering you up and telling you what a great idea you just had? That’s just crazy,” he said.

Cases of “AI psychosis” have been reported in recent months involving people who have fallen into delusions through conversations with chatbots. Some cases have involved manic episodes and some led to violence or suicide. One man who spoke with CBC became convinced he was living in an AI simulation, and another believed he had devised a groundbreaking mathematical formula.

A recent study found that large language models (LLMs) encourage delusional thinking, likely due to their tendency to flatter and agree with users rather than pushing back or providing objective information.

It’s an issue that OpenAI itself has acknowledged and looked to address with its latest model, GPT-5, which rolled out in August.

“I think the worst thing we’ve done in ChatGPT so far is we had this issue with sycophancy where the model was kind of being too flattering to users,” OpenAI CEO Sam Altman told the Huge Conversations podcast in August. “And for most users it was just annoying. But for some users that had fragile mental states, it was encouraging delusions.”

‘Don’t just agree with me’

Pickell is not the only one who’s pushing back against AI’s sycophantic tendencies.

On Reddit, many users have expressed irritation with the way the bots talk, and shared strategies on numerous threads for toning down the “flattery” and “fluff” in their chatbot responses.

Some suggested prompts like “Challenge my assumptions — don’t just agree with me.” Some have detailed instructions for tweaking ChatGPT’s personalization, for example cutting out “empathy phrases” like “sounds tough” and “makes sense.”

ChatGPT itself, when asked how to reduce sycophancy, has suggested users type in prompts like “Play devil’s advocate.”

Alona Fyshe, a Canada CIFAR AI chair at Amii, says users should also start each conversation from scratch, so that the chatbot can’t draw on past history and risk going down the “rabbit hole” of building an emotional connection.

She also says it’s important not to share personal information with LLMs — not only to keep the conversations less emotional, but also because user chats are often used to train AI models, and your private information could end up in someone else’s hands.

“You should assume that you shouldn’t put anything in an LLM you wouldn’t post on [X],” Fyshe said.

“When you get into these situations where you’re starting to build this feeling of trust with these agents, I think you also could start falling into these situations where you’re sharing more than you might normally.”

Onus should not be on individuals: researcher

Peter Lewis, Canada Research Chair in trustworthy artificial intelligence and associate professor at Ontario Tech University, says evidence shows some people are “much more willing” to disclose private information to AI chatbots than to their friends, family or even their doctor.

He says Pickell’s strategies for keeping an emotional distance are useful, and also suggests assigning chatbots a “silly” persona — for example, telling them to act like Homer Simpson.

But he emphasizes that the onus should not be on individual users to keep themselves safe.

“We cannot just tell ordinary people who are using these tools that they’re doing it wrong,” he said. “These tools have been presented in these ways intentionally to get people to use them and to remain engaged with them, in some cases, through patterns of addiction.”

He said the responsibility to regulate the technology rests with tech companies and government, especially to protect young and vulnerable users.

University of Toronto professor Ebrahim Bagheri, who specializes in responsible AI development, says there is always tension in the AI space, with some fearing over-regulation could negatively impact innovation.

“But the fact of the matter is, now you have tools where there’s a lot of reports that they’re creating societal harm,” he said.

“The impacts are now real, and I don’t think that the government can ignore it.”

Former OpenAI safety researcher Steven Adler posted an analysis Thursday on ways tech companies can reduce “chatbot psychosis,” making a number of suggestions, including better staffing support teams.

Bagheri says he would like to see tech companies take more responsibility, but doesn’t expect they will unless they’re forced to.

Calls for safeguards against ‘human-like’ tendencies

Bagheri and other experts are calling for extensive safeguards to make it clear that chatbots are not real.

“There are things that we now know are key and can be regulated,” he said. “The government can say the LLM should not engage in a human-like conversation with its users.”

Beyond regulation, he says it’s crucial to bring education on chatbots into schools, as early as elementary.

“As soon as anyone can read or write, they will go [use] LLMs the way they go on social media,” he said. “So education is key from a government point of view, I think.”

A spokesperson for AI Minister Evan Solomon said the ministry is looking at chatbot safety issues as part of broader conversations about AI and online safety legislation.

The federal government launched an AI Strategy Task Force last week, alongside a 30-day public consultation that includes questions on AI safety and literacy, which spokesperson Sofia Ouslis said will be used to inform future legislation.

However, most of the major AI companies are based in the U.S., and the Trump administration has been against regulating the technology.

Chris Tenove, assistant director at the Centre for the Study of Democratic Institutions at the University of British Columbia, told The Canadian Press last month that if Canada moves forward with online harms regulation, it’s clear “we will face a U.S. backlash.”